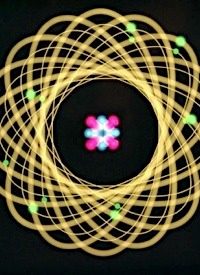

The European Organization for Nuclear Research (CERN) has developed the highest-energy proton accelerator in the history of science, the Large Hadron Collider, which accelerated its twin beams of protons to an energy of 1.18 TeV on November 30, breaking the previous world record of 0.98 TeV, held by the U.S. Fermi National Accelerator Laboratory’s Tevatron collider since 2001. The collider enables scientists to probe a little closer to the first moments of the universe, which should help them answer questions that have until now remained unanswerable. Although the new collider will help physicists learn more experimentally than they know now, the fundamental theoretical problems of physics remain as elusive as ever.

Going back to the first trillionth of a second of the universe may appear to explain a lot about nature, but the experience of the physical sciences over the last eighty years or so is that experimental results have invariably raised more questions. The explosion of subatomic particles that results from accelerating particles to very high energies and colliding them (“atom smashing”) moves science away from simplicity and toward greater complexity.

Some of the theoretical explanations that are now serious scientific propositions, like Superstring Theory, have not led to the simplicity which was once the hallmark of great science. In fact, Superstring Theory, one of the “Theories of Everything” that physicists have been seeking since Quantum Physics first postulated its descriptions of reality, has led to almost surreal possibilities like the almost infinite proliferation of universes, and has itself now blossomed into at least five different Superstring Theories.

These theories require as few as ten and as many as twenty-six different dimensions in order to “work.” The physicists who have proposed them have models that work fine mathematically, but which cannot be experimentally validated in the way that scientific theories have traditionally been validated. Some of these theories postulate the existence of Tachyons (superluminal particles with imaginary mass), while others must banish that hypothetical particle.

Perhaps the most telling problem with any theory coming out of experimental study of the first nanoseconds of the universe is that Superstring physicists agree that the number of mathematical models that could explain reality is around 10^500, a number effectively impossible for mortals to comprehend. There is no doubt that the latest atom smasher will yield valuable empirical data about the mass, vector, charge, spin, and other qualities of the zoo of myriad subatomic particles created by collisions, but even in this area of Quantum Mechanics, it is statistical analysis grounded in probability theory that guides the hand of speculation.

None of this has worked out the way scientists thought it would many decades ago, when two grand theories blossomed into a New Physics: Relativity, which explained the behavior of mass and energy at the largest cosmic scales, and Quantum Physics, which explained the characteristics of subatomic particles at the smallest scales of mass and energy.

Einstein and Heisenberg, the greatest proponents of Relativity and Quantum Physics respectively, proposed elegant answers to complex problems. Such elegance has long been the paramount factor in credible science. Although the Special Theory and the General Theory of Relativity were hard to grasp, the theories themselves were fairly simple. Indeed, most schoolchildren know E = mc². Heisenberg’s Uncertainty Principle does produce a very weird reality, but the principle itself can be stated in a paragraph or two.

When the best available explanation for reality is a vast number of mathematically workable theories, each relying on myriad dimensions which we cannot sense, then perhaps it is time for scientists to at least consider a fundamental rethinking of the limits and the purposes of science in our understanding of the universe.