A study released Tuesday appears to prove what many have long suspected and what anecdotal evidence has seemed to suggest for years: Google is manipulating your search results. The study, by search engine competitor DuckDuckGo, states, “The editorialized results [of your searches] are informed by the personal information Google has on you, like your search, browsing and purchase history,” and claims that Google’s “filter bubble” is “particularly pernicious when searching for political topics.”

This is not the first time DuckDuckGo, whose search engine is increasingly built on open-source software and does not track users, has tackled this issue. Explaining the drive behind revisiting Google’s filter bubble, the new study states: “Back in 2012 we ran a study showing Google’s filter bubble may have significantly influenced the 2012 U.S. Presidential election by inserting tens of millions of more links for Obama than for Romney in the run-up to that election.”

The 2012 study was the impetus behind an independent study by the Wall Street Journal that “found that [Google] often customizes the results of people who have recently searched for ‘Obama’ — but not those who have recently searched for ‘Romney.’”

The new study begins by explaining the filter bubble:

> Over the years, there has been considerable discussion of Google’s “filter bubble” problem. Put simply, it’s the manipulation of your search results based on your personal data. In practice this means links are moved up or down or added to your Google search results, necessitating the filtering of other search results altogether. These editorialized results are informed by the personal information Google has on you (like your search, browsing, and purchase history), and puts you in a bubble based on what Google’s algorithms think you’re most likely to click on.
>
> The filter bubble is particularly pernicious when searching for political topics. That’s because undecided and inquisitive voters turn to search engines to conduct basic research on candidates and issues in the critical time when they are forming their opinions on them. If they’re getting information that is swayed to one side because of their personal filter bubbles, then this can have a significant effect on political outcomes in aggregate.

Since 2012, when both DuckDuckGo and the Wall Street Journal exposed Google’s filter bubble, the search (read: surveillance) giant has claimed to have made changes. During the 2016 presidential campaign, then-candidate Donald Trump accused the company of manipulating search results in favor of Hillary Clinton and against him. Google denied having any political bias in its system.

So, did Google burst its own filter bubble and begin returning honest results to users’ searches? That is the question the new study sought to answer. As the study states: “Now, after the 2016 U.S. Presidential election and other recent elections, there is justified new interest in examining the ways people can be influenced politically online. In that context, we conducted another study to examine the state of Google’s filter bubble problem in 2018.”

In short, no: Google has not stopped manipulating search results, and the filter bubble is intact. As the study says:

> Google has claimed to have taken steps to reduce its filter bubble problem, but our latest research reveals a very different story. Based on a study of individuals entering identical search terms at the same time, we found that:
>
> • Most participants saw results unique to them. These discrepancies could not be explained by changes in location, time, by being logged in to Google, or by Google testing algorithm changes to a small subset of users.
>
> • On the first page of search results, Google included links for some participants that it did not include for others, even when logged out and in private browsing mode.
>
> • Results within the news and videos infoboxes also varied significantly. Even though people searched at the same time, people were shown different sources, even after accounting for location.
>
> • Private browsing mode and being logged out of Google offered very little filter bubble protection. These tactics simply do not provide the anonymity most people expect. In fact, it’s simply not possible to use Google search and avoid its filter bubble.

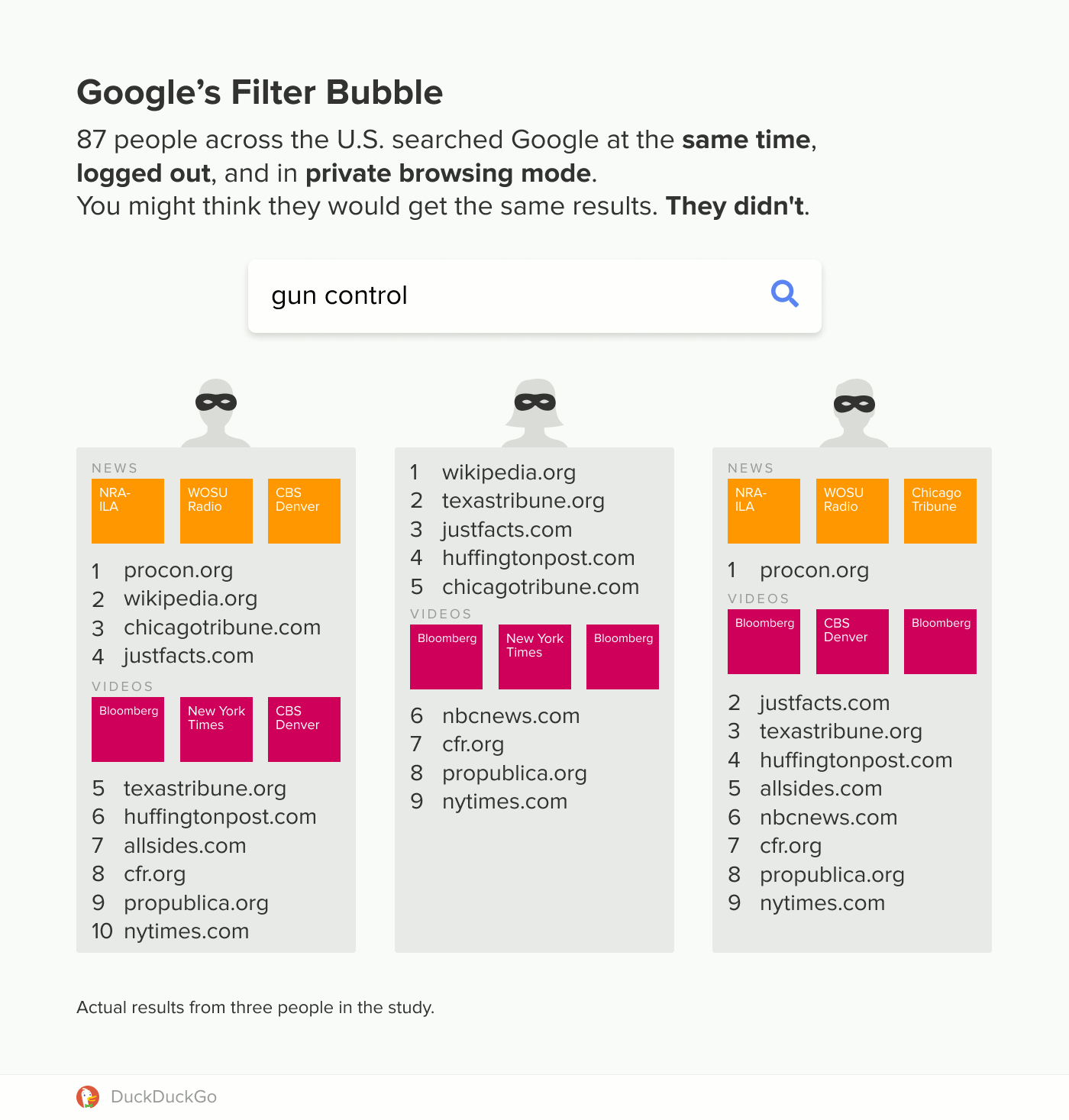

To measure the results Google returns to users’ searches, DuckDuckGo “asked volunteers in the U.S. to search for ‘gun control’, ‘immigration’, and ‘vaccinations’ (in that order) at 9pm ET on Sunday, June 24, 2018. Volunteers performed searches first in private browsing mode and logged out of Google, and then again not in private mode (i.e., in ‘normal’ mode).” As the study states, “87 people across the U.S. searched Google at the same time, logged out, and in private browsing mode. You might think they would get the same results. They didn’t.”

What the study discovered is outlined in four findings:

> Finding #1: Most people saw results unique to them, even when logged out and in private browsing mode.
>
> Finding #2: Google included links for some participants that it did not include for others.
>
> Finding #3: We saw significant variation within the News and Videos infoboxes.
>
> Finding #4: Private browsing mode and being logged out of Google offered almost zero filter bubble protection.

Let’s unpack each of those findings.

What finding #1 means, besides the obvious fact that Google is filtering results, is that the company whose motto was once “Don’t be evil” is breaking not only its promise of reform but also its own self-proclaimed standard of behavior. The only way the company could provide results unique to a user is to maintain digital surveillance on that user, even when the user is logged out and browsing in “private” mode. Since logging out and going private are clear signs that one wishes to avoid having one’s traffic monitored, “evil” is a fitting word to describe Google for doing it anyway.

Finding #2 serves as evidence — even proof — that Google is tailoring results instead of simply providing the most relevant answers to users’ searches. If any number of people are performing the exact same search at the exact same time, the only honest thing Google can do is return the exact same results. Anything else is a matter of dishonesty and manipulation.

Finding #3 is an almost exact repeat of finding #2, with the only difference being the news articles and videos suggested as answers to users’ queries. The point is the same, though. The same search should return the same results.

Perhaps finding #4 is the most disturbing. It bookends nicely with finding #1. They both mean that Google is surveilling users even when they are not logged into Google and even when they use their browser’s private browsing mode.

What all of these findings and the corresponding documentation mean is that Google, far from turning over a new leaf, is still up to the same old tricks. Based on these searches, all on political hot-button issues, it is obvious that Google is projecting its political will instead of returning honest results.

The following graphic from the study illustrates the actual search results of three volunteers on the issue of gun control:

Notice anything interesting about those results? The lion’s share of them are liberal sites in favor of further curtailing the rights of law-abiding gun owners. While all three volunteers listed saw a news article from NRA-ILA, the only video links (sadly, the most-used source of “information” for Americans) were from liberal anti-gun media organs.

An honest return would show the most relevant information (based on click popularity and other metrics). Rather than seeking to answer questions posed by users, Google is in the business of harvesting users’ data and filtering results based on what the company knows about those users. It is psychological warfare aimed at manipulating users’ thought processes by controlling the information they think is available.

Imagine if you went into your local library and searched the books there for information on a particularly controversial topic. Now imagine your neighbor conducts the same search at the same library using the same books. You would both get the same results, every time. The only variable would be your own biases. Period. But now imagine that the librarian knows a great deal about your habits — searches you’ve conducted in the past, books and magazines you’ve read, movies and television shows you’ve watched, products you have purchased — and uses that data (along with his own political biases) to filter the books he allows you access to. That is essentially what Google is doing.

Since DuckDuckGo does not harvest data, the search engine has no way to tailor results: everyone searching for the same thing at the same time sees the same results.

The easiest way to demonstrate this is to sit down with a friend or family member and, each on your own device, run the same two sets of searches at the same time. Do the first set using DuckDuckGo and the second set using Google.

After that experiment (assuming that privacy and honesty matter to you), you will likely switch to DuckDuckGo for all of your future searches.